Project Title: InGlove

Project Team: Vedant Agrawal and Shruti Nambiar

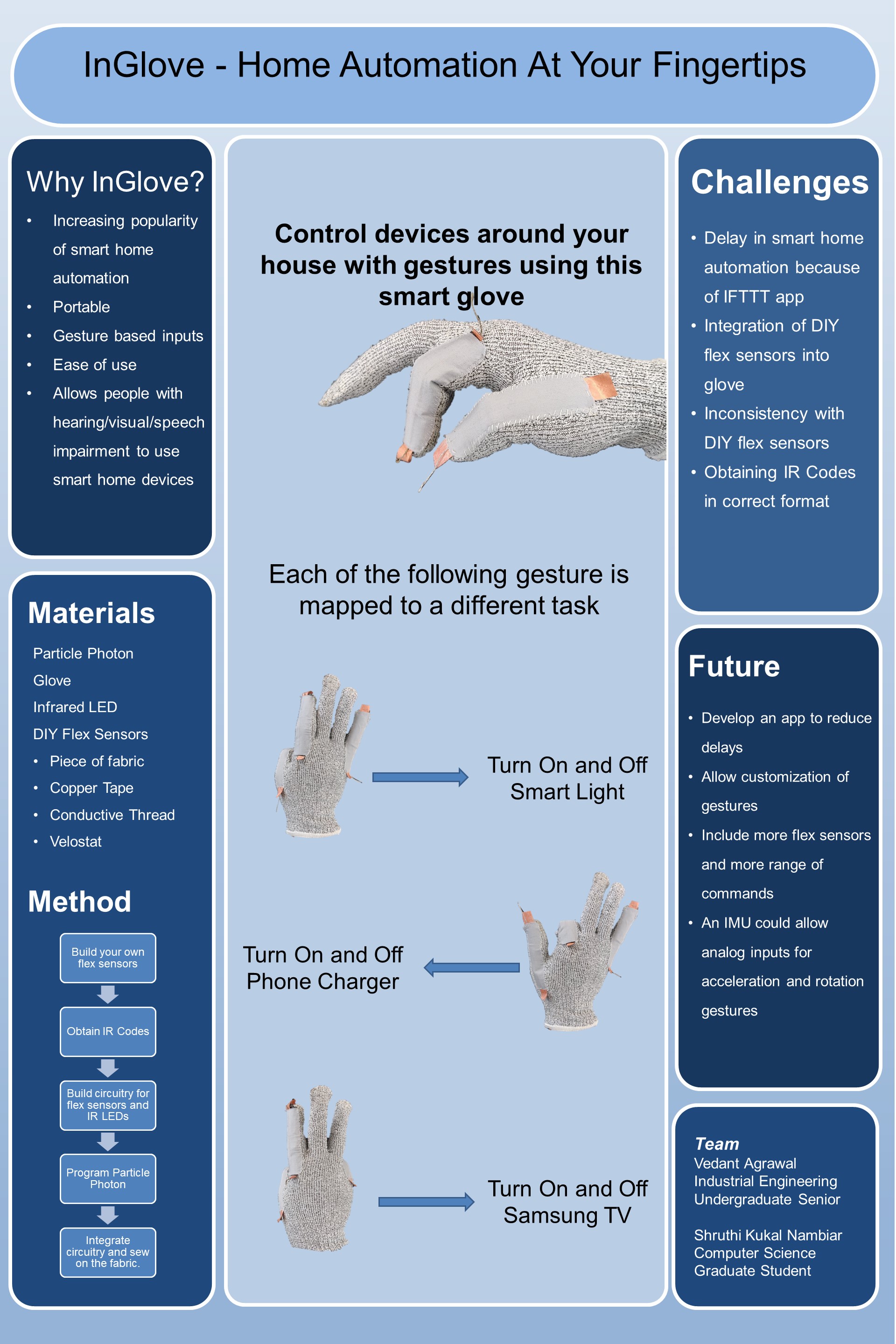

Project One Liner: Smart glove that helps the user control their TV and smart switches/lights using hand gestures

Video:

Poster:

Describe what your project does and how it works

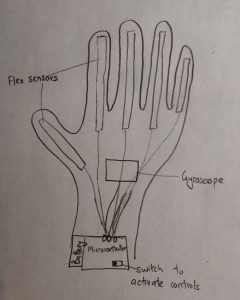

This is a smart glove that lets the user control smart (WiFi-connected) and infrared (IR) devices, such as TVs and music receivers, in their home. The glove has fabric flex sensors integrated onto the fingers, which allow the Particle Photon microcontroller to recognize when the user bends their fingers to make specific hand gestures. For IR devices, the microcontroller sends the IR signal through an IR LED transmitter circuit integrated onto the glove. For smart devices, the microcontroller publishes an event to the Spark cloud over WiFi. IFTTT, a web-based app gateway service, then recognizes the published event and tells the smart device's app to execute the command corresponding to the gesture.
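
The gesture-to-command step described above can be sketched as a small, host-testable C++ function. This is an illustrative sketch under stated assumptions: the threshold, the three-finger bitmask encoding and the command names (`tv_power`, `light_on`, etc.) are hypothetical. On the Photon, the readings would come from `analogRead()`, an IR command would drive the IR LED, and a cloud command would be sent with `Particle.publish()` for IFTTT to pick up.

```cpp
#include <array>
#include <cstddef>
#include <string>

// Hypothetical threshold on a raw 12-bit reading (0..4095). Assumes each sensor
// sits on the high side of a voltage divider, so bending it (lower resistance)
// raises the reading. A real value would need per-sensor calibration.
constexpr int kBentThreshold = 2000;

enum class Target { None, Infrared, Cloud };

struct Command {
    Target target;
    std::string name;  // hypothetical IR code label or cloud event name
};

// Encode three finger states as a bitmask: bit i is set when finger i is bent.
unsigned gestureMask(const std::array<int, 3>& readings) {
    unsigned mask = 0;
    for (std::size_t i = 0; i < readings.size(); ++i) {
        if (readings[i] > kBentThreshold) {
            mask |= 1u << i;
        }
    }
    return mask;
}

// Map a gesture to a command. Infrared commands would drive the IR LED;
// Cloud commands would be published as events for IFTTT.
Command commandFor(unsigned mask) {
    switch (mask) {
        case 0b001: return {Target::Infrared, "tv_power"};
        case 0b010: return {Target::Infrared, "tv_volume_up"};
        case 0b100: return {Target::Cloud, "light_on"};
        case 0b110: return {Target::Cloud, "light_off"};
        default:    return {Target::None, ""};  // open hand or unmapped gesture
    }
}
```

For example, bending only the first finger (`gestureMask({3000, 100, 100})`) yields mask `0b001`, which maps to the hypothetical `tv_power` IR command. Requiring the same mask for several consecutive readings would likely help avoid firing commands while the hand is still mid-gesture.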

The glove is intended for users with physical disabilities who find it difficult to move around their homes to control electronic devices, as well as users with speech disabilities who cannot easily communicate with a Google Home or Alexa to control their smart devices.

Describe your overall feelings on your project. Are you pleased, disappointed, etc.?

We set out to build a gesture-based alternative to Google Assistant. We are happy that we did build a prototype that accepts gesture-based inputs to control smart home devices. However, there is still considerable room for improvement: we spent most of our time on coding and other technical aspects, and less on assembling the circuits and integrating them into the glove's design.

Describe how well did your project meet your original project description and goals.

Our original goal was to build a gesture-based home automation glove. I believe we met our initial goals, though more as a proof of concept than as a finished product. We showed that it is possible to connect wearable fabrics over WiFi to smart devices and to control several devices at a time using gestures. However, there was room for improvement in the consistency of results: we had a tough time with our IR circuit, which only worked intermittently. We could also have done a better job of integrating these components into the fabric.

Describe the largest hurdles you encountered. How did you overcome these challenges?

One of the earliest challenges we faced was the flex sensors themselves. We first bought a long and a short commercial flex sensor from Adafruit. However, the commercial flex sensors cost $12 apiece and were made of a stiff, plastic-like material, which made them difficult to tack down to the fabric so that they followed its movement. We then figured out how to build our own DIY flex sensors from Velostat and electrical tape, which cost less than a few cents apiece. However, the electrical tape did not adhere well to our glove's fabric either. After discussing it with Marianne, we decided to try building the flex sensors out of fabric, and it worked!
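
A Velostat or fabric flex sensor behaves as a variable resistor whose resistance drops as it is bent or pressed, so it is commonly read through a voltage divider. The sketch below assumes a hypothetical wiring (sensor between 3.3 V and an analog pin, fixed resistor from the pin to ground) and uses the Photon's 12-bit `analogRead()` range of 0 to 4095 to recover the sensor's resistance; the function name and resistor value are illustrative.

```cpp
#include <stdexcept>

// The Photon's analogRead() returns 12-bit values from 0 to 4095.
constexpr double kAdcMax = 4095.0;

// Assumed (hypothetical) wiring: flex sensor between 3V3 and the analog pin,
// fixed resistor between the pin and GND. The divider gives
//   adc / 4095 = Rfixed / (Rsensor + Rfixed)
// which rearranges to
//   Rsensor = Rfixed * (4095 - adc) / adc
double sensorResistance(int adc, double rFixedOhms) {
    if (adc <= 0 || adc > 4095) {
        // A reading of 0 means no current through the sensor (open circuit).
        throw std::out_of_range("reading must be in 1..4095");
    }
    return rFixedOhms * (kAdcMax - adc) / adc;
}
```

For example, with a 10 kΩ fixed resistor, a reading of 1365 (one third of full scale) corresponds to a sensor resistance of 20 kΩ. Tracking resistance rather than raw readings makes it easier to compare sensors built with different fixed resistors.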

Once we had working sensors, our next challenge was getting the flex sensors to communicate with the smart devices. We used a Particle Photon as the microcontroller, and there was a small learning curve to understanding how the Particle worked. Once we figured that out, we connected it to the smart device using a gateway application called IFTTT. However, because IFTTT is a free platform serving a large user base, it only guaranteed checking for new events about once a minute. This meant that when the Particle Photon published an event to the Spark cloud after a finger was flexed, IFTTT would only check for that event once per minute, causing a large delay between the finger flex and the smart light turning on. We tried to work around this by building our own app that could read events directly from the Spark cloud on Particle's servers, but the Android API integration was poorly documented and there was little support from online communities, so we decided to stay with IFTTT.
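
To put that delay in numbers, assume IFTTT checks for new events every $T = 60$ s and a gesture happens at a uniformly random moment between checks. The wait $W$ before IFTTT sees the event is then uniform on $[0, T]$:

$$
W \sim \mathcal{U}(0, T), \qquad \mathbb{E}[W] = \frac{T}{2} = 30\ \text{s}, \qquad W_{\max} = T = 60\ \text{s}
$$

This ignores network and device latency, so observed delays would likely be somewhat longer.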

Another challenge we faced throughout the project was hardware debugging. Since neither of us had a strong background in embedded systems and electronics, we found it somewhat difficult. Integrating and assembling all of the components onto the glove was also challenging, since we had to ensure there were no crossed connections or short circuits and that all the wires were tacked down to make consistent contact. Over the course of the semester, however, we became better at hardware debugging and learned what to look for and how to diagnose circuit problems.

Describe what would you do next if you had more time

Since neither of us had experience with app development, we spent a lot of time trying to figure out how to build an app that connects to the Spark cloud to read events published by the Particle, and that also connects to the smart switch/light app that controls the smart device. We found that each of these two functions had been implemented separately, but not in the same app (other than IFTTT). With more time, we would develop such an app for the glove to reduce the delay between the finger flex and the smart light turning on or off.

We would also like to integrate more flex sensors to capture a wider range of gestures, and thus commands. Additionally, we would use that time to better integrate the circuits into the glove, making it comfortable yet functional.

Materials Used:

- 1 x Thin Glove

- 1 x Particle Photon

- 3 x DIY Fabric Flex Sensors:

i. Velostat – Force-sensitive fabric

ii. Conductive Thread

iii. Copper Tape

iv. Pieces of thin cotton fabric

- 1 x Infrared LED

- General electrical components (wires, resistors, general-purpose transistor)