PROJECT POST #8

Project Title: Vis Hat

Project Team: Lydia, Fu, Jay

Sentence Description:

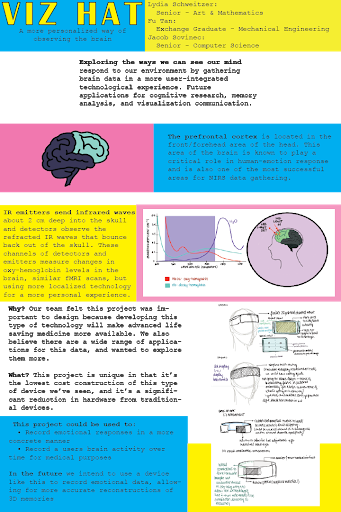

Exploring the ways in which we can see our mind respond to our environment by gathering brain data in a more user-integrated technological experience.

Video:

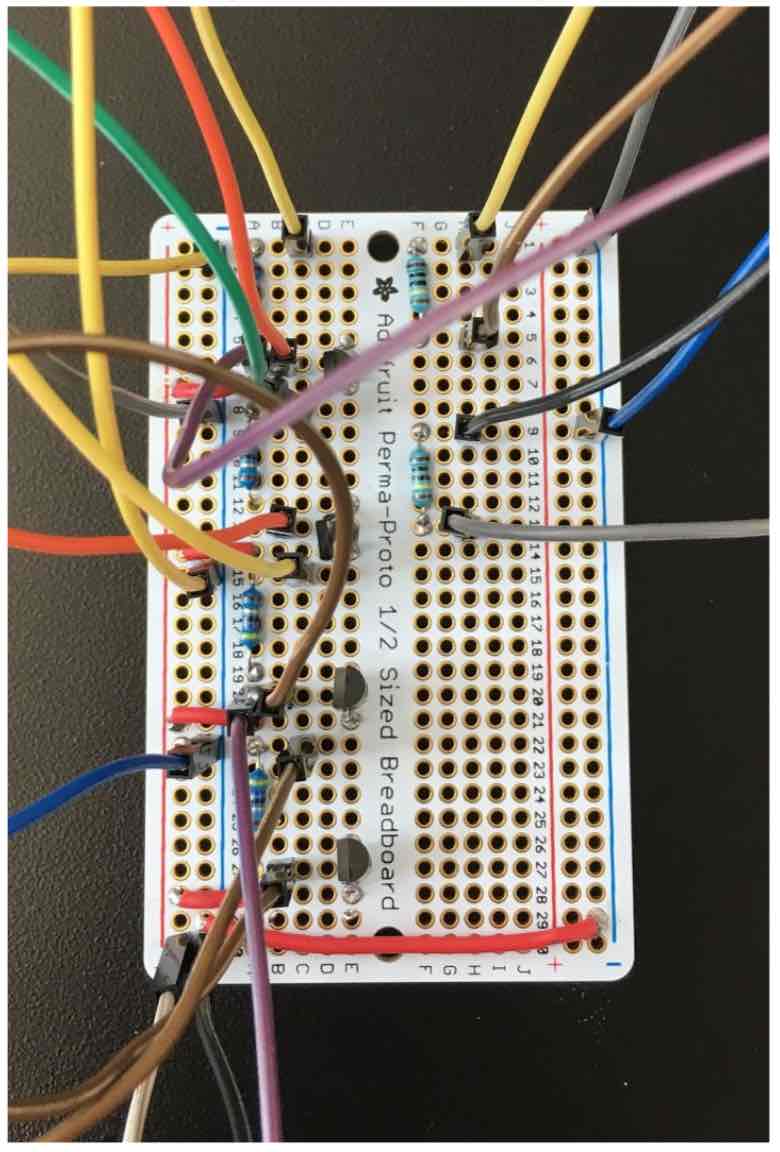

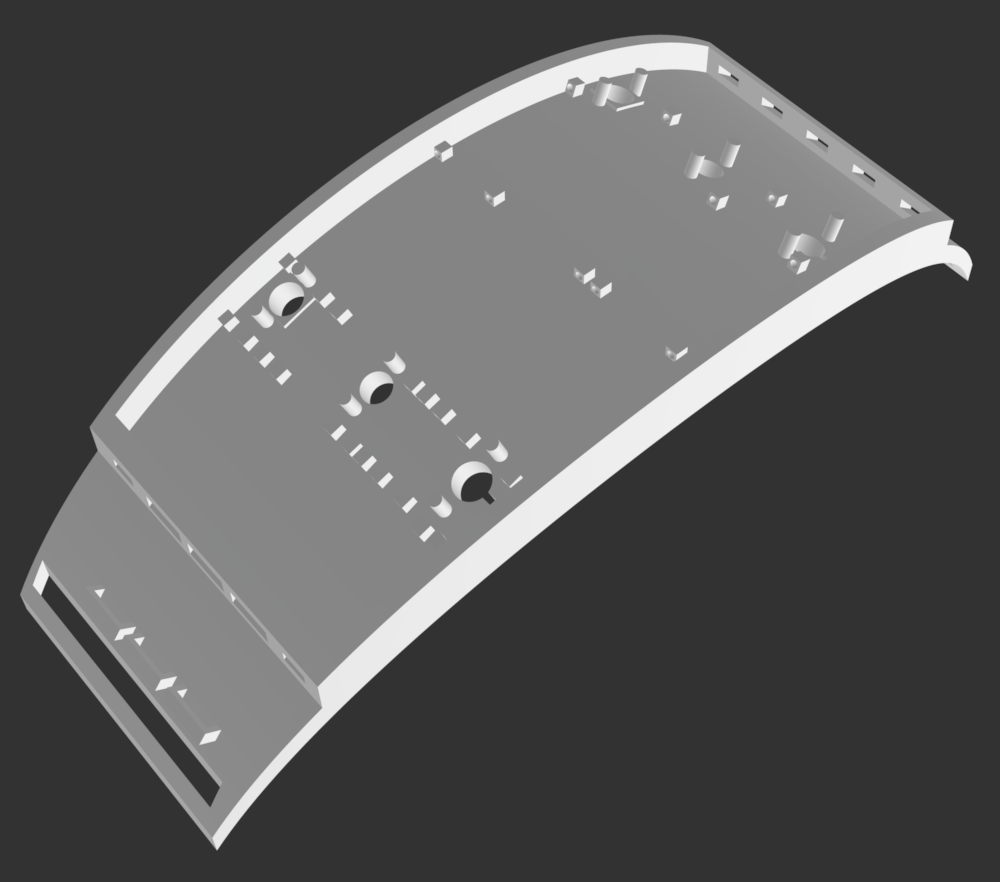

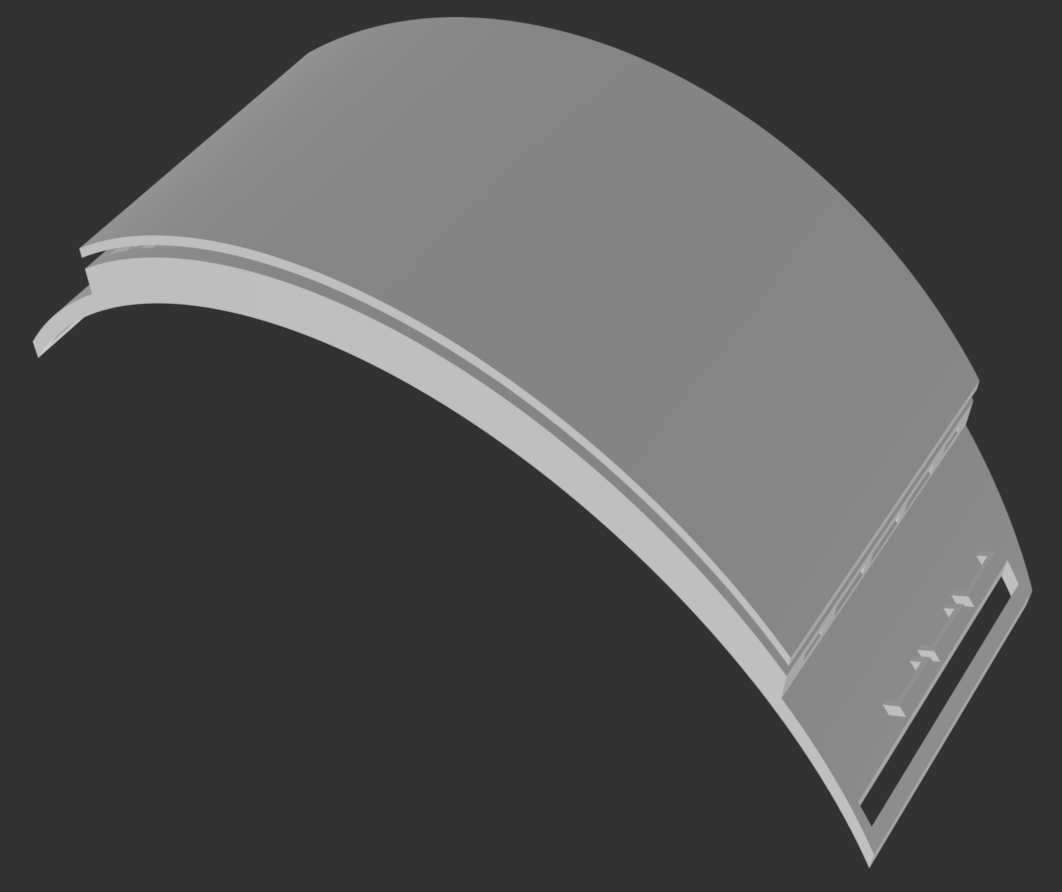

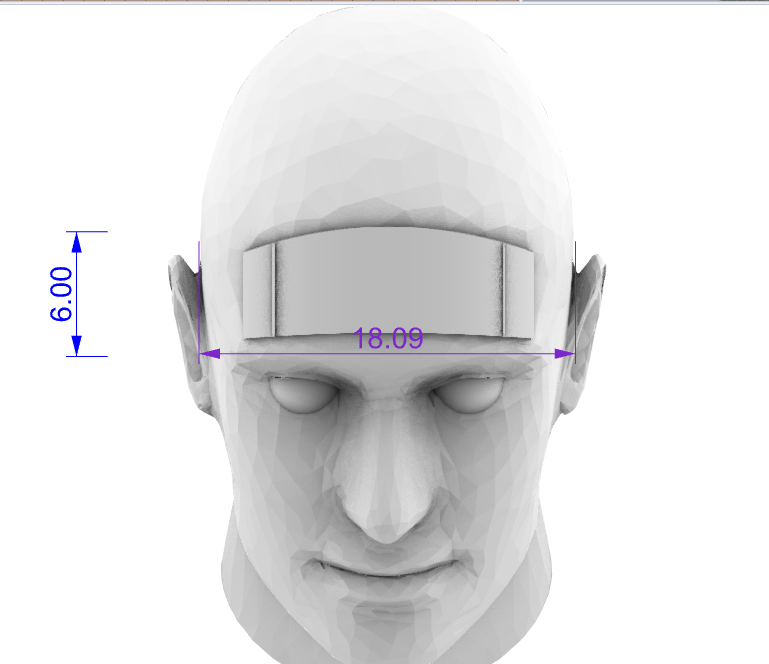

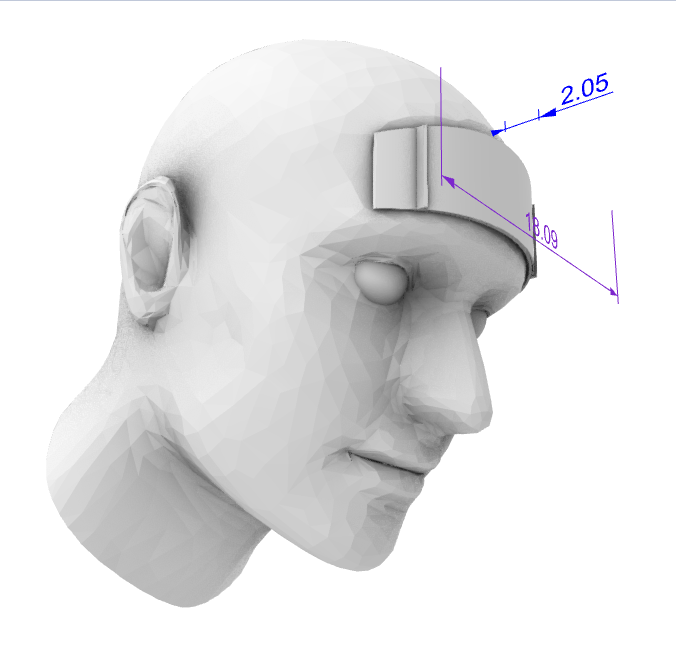

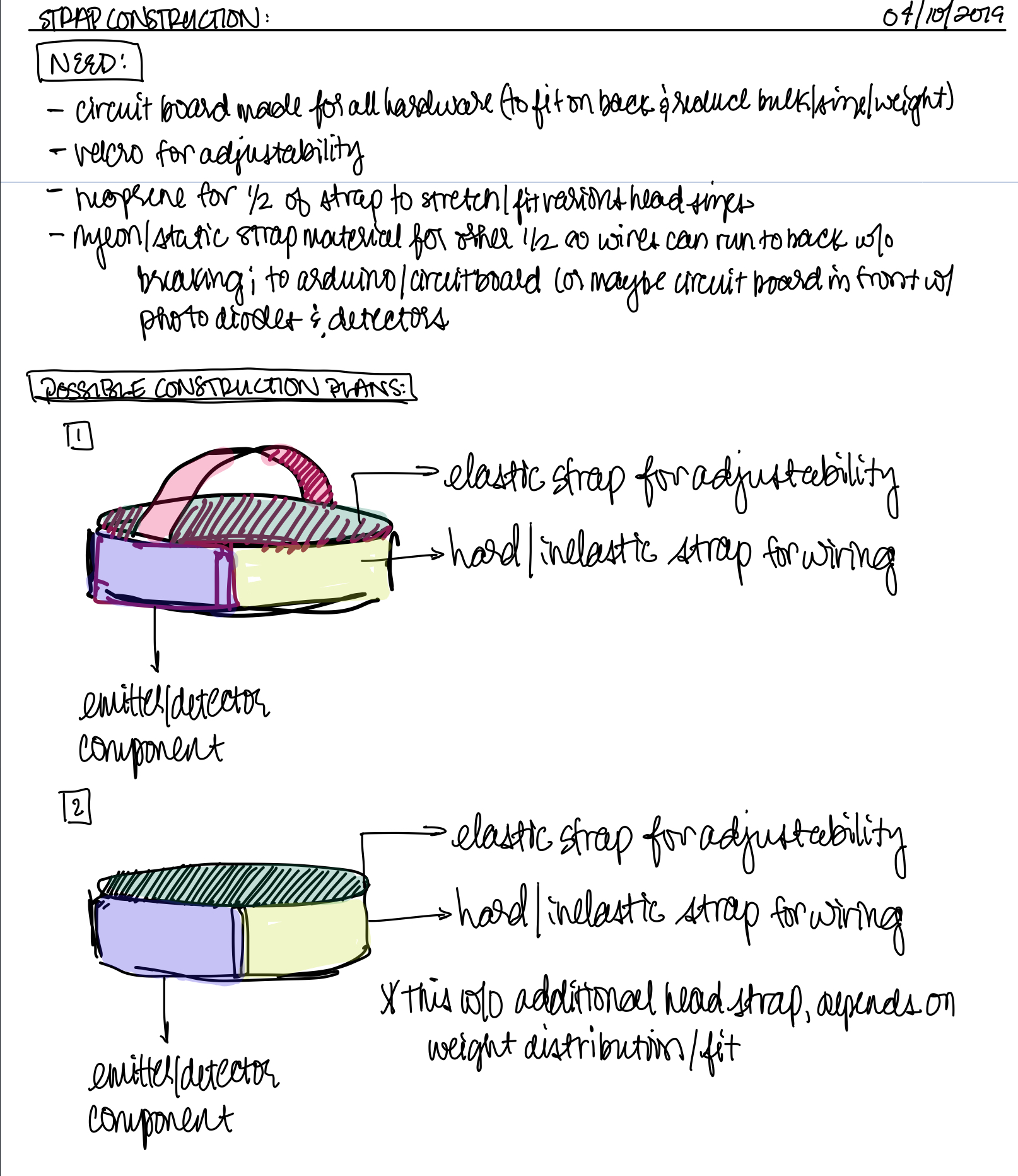

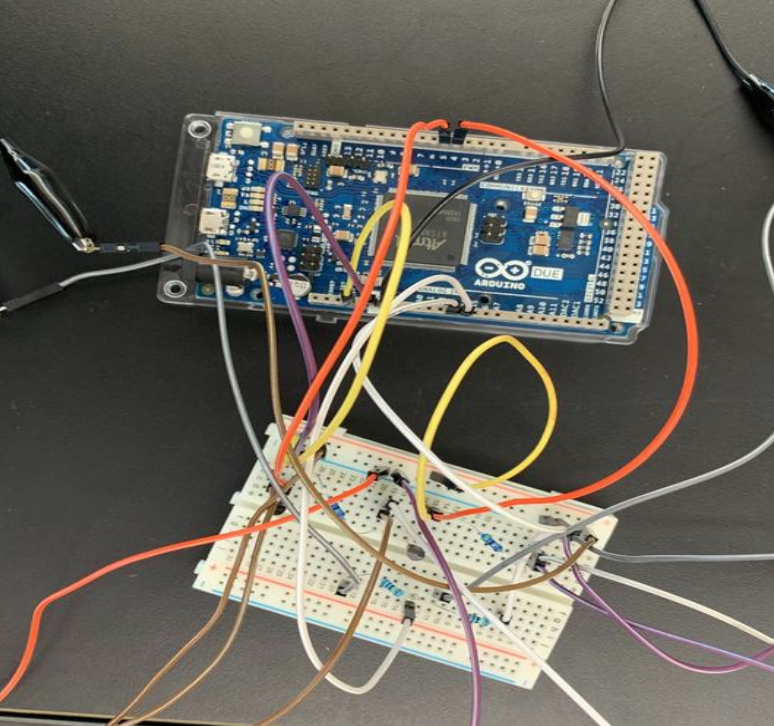

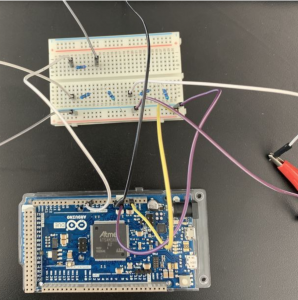

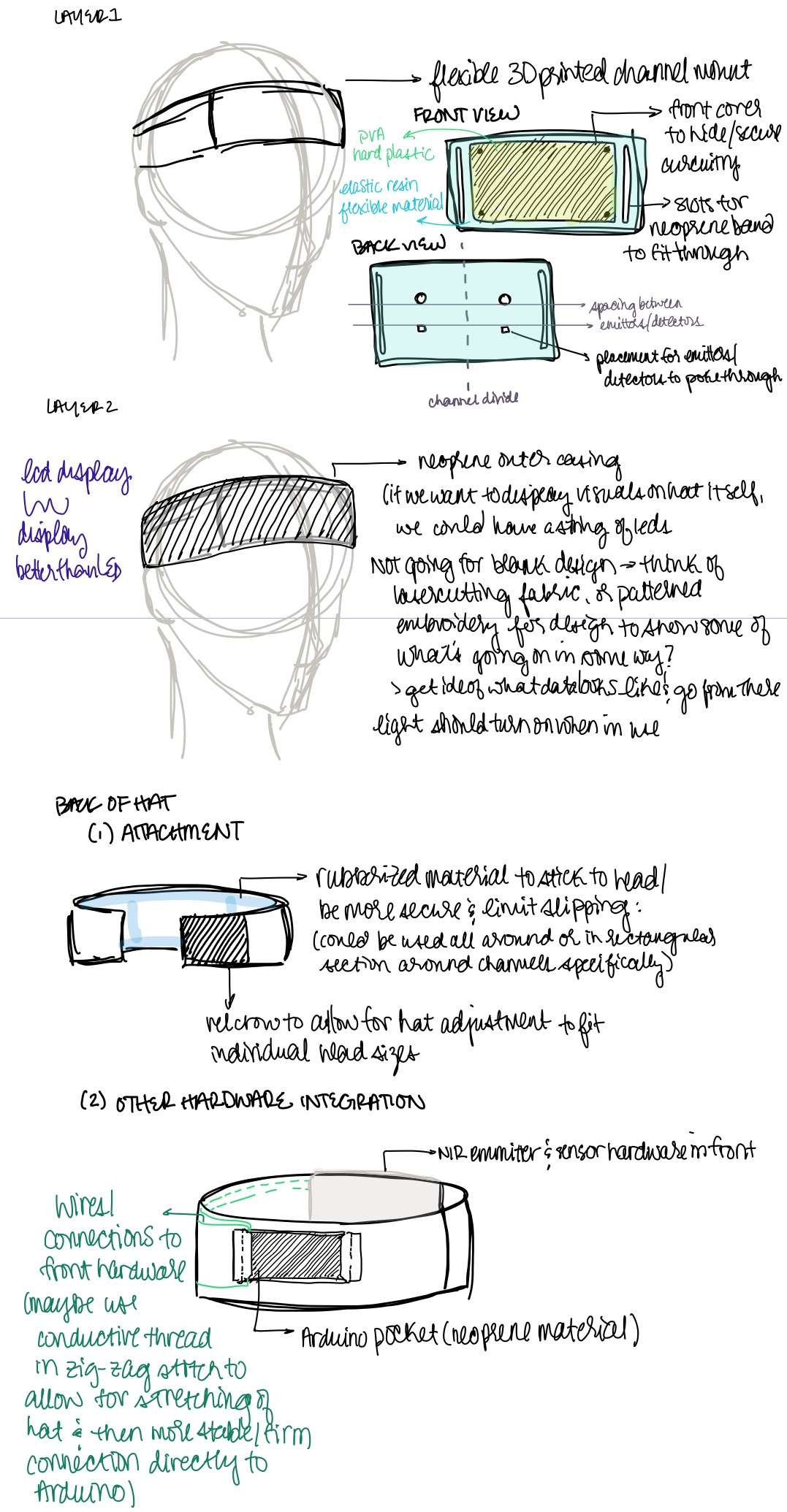

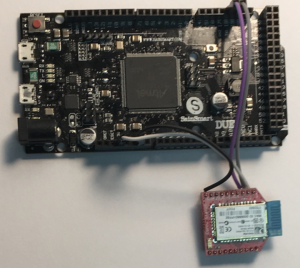

Description: All IR emitter and photodiode detector hardware is built into the front 3D-printed plastic compartment. The compartment is attached to a static strap which leads all wires from the front component to the back of the strap near the back of the head. Wires are attached to this strap via zig-zag stitching. The hardware in the back includes a protoboard with all wire connections to the emitter/detector setup and the Arduino Due which is used to transfer data via a USB cable to a computer. These components are housed in a neoprene pocket with drawstrings to close off visible wires. The other strap is neoprene and flexible to allow for different adjustments for various head shapes/sizes and is attached to the end of the static strap with velcro.
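On the computer side, the readings arriving over USB could be parsed roughly as follows. This is a minimal sketch only: the post does not describe the wire format, so the comma-separated `millis,detector_a,detector_b` line layout and the names `Sample`, `parse_line`, and `read_samples` are all assumptions made for illustration.

```python
from typing import Iterable, Iterator, NamedTuple


class Sample(NamedTuple):
    """One reading from the headset (hypothetical layout)."""
    millis: int       # time since the board started, in milliseconds
    detector_a: int   # raw ADC value from the first photodiode
    detector_b: int   # raw ADC value from the second photodiode


def parse_line(line: str) -> Sample:
    """Parse one 'millis,detector_a,detector_b' text line (assumed format)."""
    parts = line.strip().split(",")
    if len(parts) != 3:
        raise ValueError(f"expected 3 comma-separated fields, got {len(parts)}")
    millis, a, b = (int(p) for p in parts)
    return Sample(millis, a, b)


def read_samples(lines: Iterable[str]) -> Iterator[Sample]:
    """Yield samples from any iterable of text lines, skipping bad ones."""
    for line in lines:
        if not line.strip():
            continue  # ignore blank lines
        try:
            yield parse_line(line)
        except ValueError:
            continue  # skip partial or corrupted lines


# Example with canned lines standing in for the USB stream.
for sample in read_samples(["0,512,498\n", "garbage\n", "20,515,497\n"]):
    print(sample)
```

Because `read_samples` accepts any iterable of text lines, the same function can be fed from a log file or from a serial port wrapper that decodes bytes to text.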

Video:

Poster Image:

Project Description and Function:

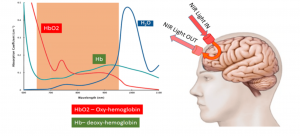

The prefrontal cortex is located in the front/forehead area of the head. This area of the brain is known to play a critical role in human emotional response and is also one of the most successful areas for near-infrared spectroscopy (NIRS) data gathering.

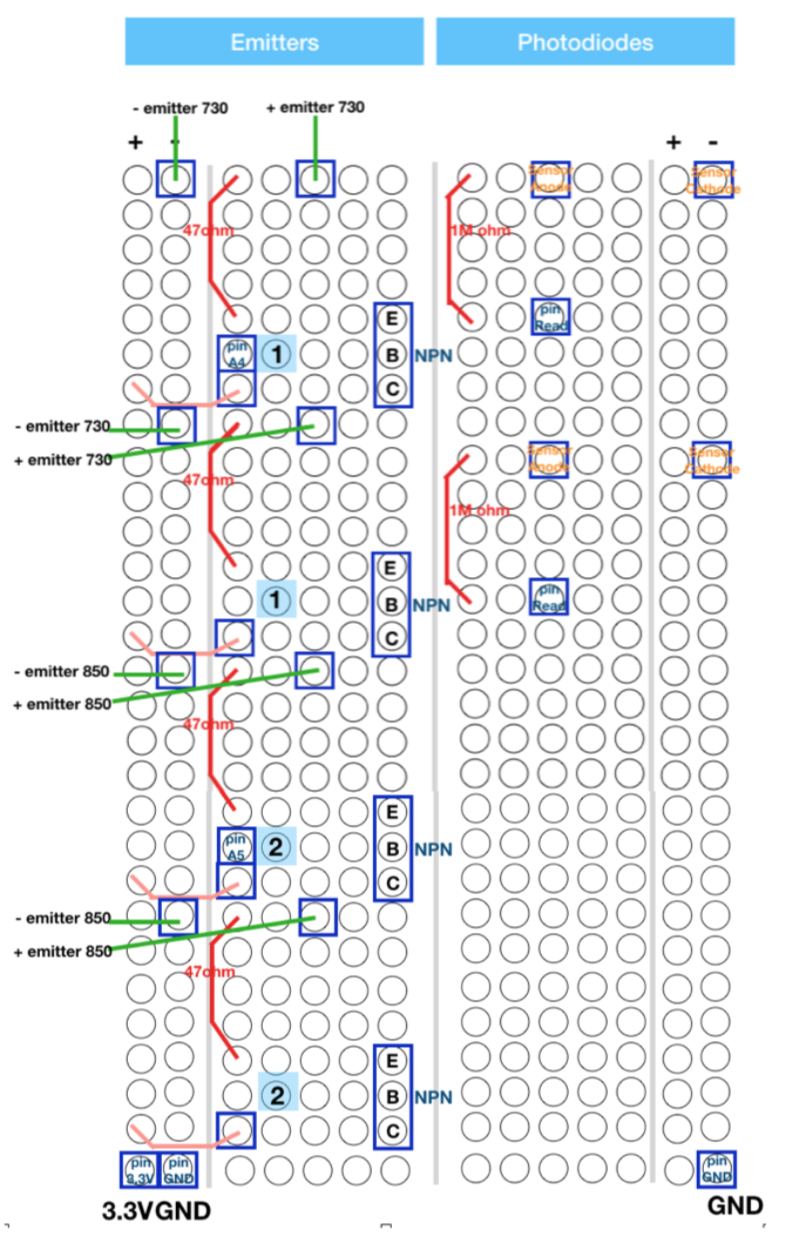

IR emitters send infrared light about 2 cm deep into the skull, and detectors pick up the scattered IR light that comes back out. These emitter/detector channels measure changes in oxy-hemoglobin levels in the brain, similar to fMRI scans, but using more localized technology for a more personal experience.
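One common way to turn two-wavelength NIRS readings into hemoglobin changes is the modified Beer-Lambert law. The sketch below illustrates that approach under stated assumptions and is not necessarily the method used in Vis Hat: the function names, the source-detector distance, the differential pathlength factor (`dpf`), and the extinction coefficients in the usage lines are placeholders. Real coefficients for the chosen wavelengths would need to come from a published table.

```python
import math


def delta_od(i_baseline: float, i_now: float) -> float:
    """Change in optical density between a baseline and a current intensity."""
    return -math.log10(i_now / i_baseline)


def hemoglobin_changes(d_od_1, d_od_2, eps_1, eps_2, distance_cm, dpf):
    """Solve the two-wavelength modified Beer-Lambert system.

    d_od_1, d_od_2: optical density changes at wavelength 1 and 2.
    eps_1, eps_2:   (eps_HbO, eps_HbR) extinction coefficients per wavelength.
    Returns (delta_HbO, delta_HbR) in the concentration units implied by eps.
    """
    a, b = eps_1
    c, d = eps_2
    det = a * d - b * c
    if det == 0:
        raise ValueError("wavelengths do not separate HbO from HbR")
    scale = distance_cm * dpf          # effective optical path length
    y1, y2 = d_od_1 / scale, d_od_2 / scale
    d_hbo = (d * y1 - b * y2) / det    # Cramer's rule for the 2x2 system
    d_hbr = (a * y2 - c * y1) / det
    return d_hbo, d_hbr


# Usage with PLACEHOLDER numbers (not measured values or real coefficients).
od_short = delta_od(i_baseline=800.0, i_now=790.0)   # e.g. the 730 nm channel
od_long = delta_od(i_baseline=900.0, i_now=870.0)    # e.g. the 850 nm channel
print(hemoglobin_changes(od_short, od_long,
                         eps_1=(1.0, 3.0), eps_2=(2.5, 1.5),
                         distance_cm=3.0, dpf=6.0))
```

Two wavelengths are needed because oxy- and deoxy-hemoglobin absorb differently on either side of roughly 800 nm, which is what lets the two unknowns be separated.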

Data is visualized in real time using Unity 3D/VR software, where an increase in activity appears as an increase in heat/light. These heat and light changes correspond to activity changes detected in the forehead/skull/brain within 2 cm of the surface mount.
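The mapping from signal to heat/light could look roughly like the following. This is a Python sketch of the idea only, not the Unity code: `normalize`, `smooth`, `heat_color`, the calibration range, and the blue-to-red ramp are all assumptions made for illustration.

```python
def normalize(value: float, lo: float, hi: float) -> float:
    """Scale value into [0, 1] using a calibration range, clamping outliers."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    t = (value - lo) / (hi - lo)
    return max(0.0, min(1.0, t))


def smooth(previous: float, new: float, alpha: float = 0.2) -> float:
    """Exponential moving average so the visuals do not flicker."""
    return previous + alpha * (new - previous)


def heat_color(t: float) -> tuple:
    """Map activity t in [0, 1] to (r, g, b): cool blue at 0, hot red at 1."""
    return (t, 0.0, 1.0 - t)


# Example: feed a few raw readings through the pipeline.
level = 0.0
for raw in [510, 530, 560, 590]:
    level = smooth(level, normalize(raw, lo=500, hi=600))
    print(heat_color(level))
```

The smoothing factor trades responsiveness for stability; a larger `alpha` follows the signal faster but shows more noise.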

Future applications include cognitive research, memory analysis, and visualization communication.

Project Feelings/Evaluation:

Considering our prior knowledge in all related subjects, we feel accomplished with our final product. We each, in our own ways, dove deeply into academic research outside our own fields, and brought those conclusions together to create something that accomplished our goal: to manipulate a virtual environment using data from the head. We’re each proud of our own work on the project, and of each other's.

Goal Meeting Description:

Our initial goal of coming up with a more wearable device that could monitor activity level changes near/around certain parts of the head for the purposes of visualization was definitely met. However, because of the complexity of sourcing high-quality materials and the accuracy expected of a similar device built for a medical environment, we were unable to use the device with that same level of sensitivity. Overall, this was a great learning process for us all, and given all we discovered along the way, our final result definitely exceeded what we expected of ourselves given our experience and time frame.

Hurdles Encountered and Overcoming Challenges:

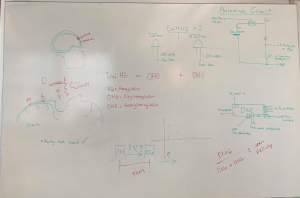

- Lack of knowledge designing hardware

- Lack of knowledge regarding brain physiology

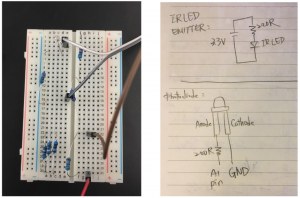

We overcame the hardware challenges step by step. We first tested all the components we needed to make sure our proof of concept worked. Then we built the first version of the circuit and discussed the VIS HAT design. After that testing process, we felt more confident about integrating all the components. In the end, we think our hardware design turned out well.

As for the brain physiology part, we spent a lot of time researching and reading relevant papers, starting from basic knowledge of the brain and gradually working out how to build our VIS HAT, which in turn fed back into the hardware design.

Approach if we were to do it again/With more time:

With more time, we would all want to spend more of it testing our VIS HAT and collecting a large amount of data from it. With that data, we could experiment with and analyze it, try to identify and classify different kinds of brain activity, and show a demo of the VIS HAT's functionality alongside that activity.

Final Materials:

| title/link | # needed | price/unit | notes | shipping and tax | TOTAL PRICE |
| --- | --- | --- | --- | --- | --- |
| arduino due | 1 | 34.43 | | | 34.43 |
| NPN transistor | 1 | 5.99 | this one unit contains ~200 resistors | | 5.99 |
| 730nm emitter | 2 | 8.61 | | 9.54 | 26.76 |
| 850nm emitter | 2 | 1.46 | | | 2.92 |
| 850 max nir detector/sensor | 2 | 5.37 | | 9.02 | 19.76 |
| bluetooth | 1 | 28.95 | RN41XVC (with chip antenna) | 8.81 | 37.76 |
| 850nm emitter | 2 | 1.46 | To account for trial and error hardware testing | 7.99 | 9.45 |
| 9 volt battery power supply adapter | 1 | 5.99 | | | 5.99 |
| 3d print in elastic material | 1 | 0 | | | 0 |
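
The list above gives per-item totals but no overall figure. As a quick check, the overall cost can be summed from those totals, taken exactly as written in the table. The variable names below are illustrative only.

```python
from decimal import Decimal

# Per-item totals copied as-is from the materials list above.
line_totals = {
    "arduino due": "34.43",
    "NPN transistor": "5.99",
    "730nm emitter": "26.76",
    "850nm emitter": "2.92",
    "850 max nir detector/sensor": "19.76",
    "bluetooth": "37.76",
    "850nm emitter (spares for testing)": "9.45",
    "9 volt battery power supply adapter": "5.99",
    "3d print in elastic material": "0",
}

# Decimal avoids floating-point rounding noise when adding prices.
grand_total = sum((Decimal(v) for v in line_totals.values()), Decimal("0"))
print(grand_total)  # 143.06
```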