Weekly accomplishments:

Curt:

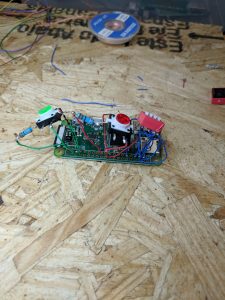

This week I started 3D printing the finger. The servo mountings were printed along with the fingertip. However, the printer broke, so I was unable to print the hinges and finger interconnect pieces. If the 3D printer I was using is still broken, I will reach out to the library about printing. On another note, the finger construction is proceeding with fair results for a first prototype, and I have been preparing the parts to screw into each other. Next week I plan to print the rest of the finger and assemble the initial prototype. I will also be shopping for a wrist/hand brace to provide a soft yet stable base for the finger.

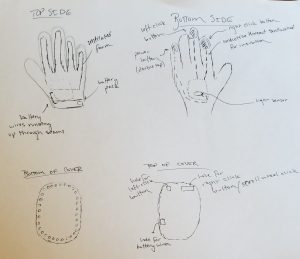

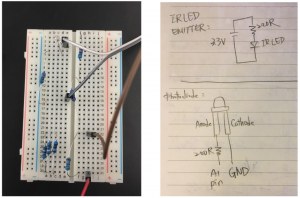

I have also pursued the custom flex sensor that Shruthi and Vedant worked on last week. Specifically, I have produced two flex sensors joined by a central node in order to capture flex at two different joints of a finger. My next steps on this part are to purchase a glove liner, construct flex sensors for the fingers, sew the flex sensors onto the liner, and start capturing hand data.
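Once the liner is instrumented, each segment of the two-joint sensor will need its own calibration. The sketch below shows one way to do that. It assumes a simple two-point linear calibration per segment, taken with the joint flat and fully bent. The class and function names, the 90° full-bend angle, and the example resistances are all hypothetical, not measured values.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class JointCalibration:
    """Hypothetical per-segment calibration (one for each side of the central node)."""
    r_flat: float            # segment resistance (ohms) with the joint straight
    r_bent: float            # segment resistance (ohms) with the joint fully bent
    max_angle: float = 90.0  # assumed full-bend angle in degrees


def resistance_to_angle(r: float, cal: JointCalibration) -> float:
    """Linearly interpolate a segment resistance to a joint angle in degrees.

    Works whether resistance rises or falls with bending, because only the
    two calibration points define the direction. The result is clamped to
    [0, max_angle] so readings outside the calibrated range stay bounded.
    """
    fraction = (r - cal.r_flat) / (cal.r_bent - cal.r_flat)
    fraction = min(max(fraction, 0.0), 1.0)
    return fraction * cal.max_angle


def two_joint_angles(r_base: float, r_tip: float,
                     base_cal: JointCalibration,
                     tip_cal: JointCalibration) -> Tuple[float, float]:
    """Return (base_joint_deg, tip_joint_deg) for one finger."""
    return (resistance_to_angle(r_base, base_cal),
            resistance_to_angle(r_tip, tip_cal))
```

Here is an example. With `base_cal = JointCalibration(10000.0, 40000.0)` and `tip_cal = JointCalibration(8000.0, 2000.0)`, the call `two_joint_angles(25000.0, 5000.0, base_cal, tip_cal)` returns `(45.0, 45.0)`. If the DIY sensors turn out to respond nonlinearly, a lookup table or curve fit over more calibration points would replace the linear interpolation.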

For the algorithm that converts a gesture into a finger joint position, I still need to work out the specifics. To discuss this, I first need to describe the two applications I have in mind for the finger: an assistive grasping device and a hand-remapping device. For an assistive grasping device, a labeled cluster may be enough to infer the user's intention, which can then serve as a lookup for the rough position of the thumb. For example, an open hand would fully open the finger, and a closed hand would fully bend it. The mapping for other gestures is less clear, but based on Asada's previous work, I would constrain the clustering to the thumb and first two fingers of the user's hand. An alternate approach is to attempt full-hand clustering and augment the predicted values with the artificial synergies from Asada's work. For hand remapping, where the thumb and first two fingers are treated as a hand distinct from the last two fingers and the SRF thumb, I plan to use artificial synergies as a direct signal to the SRF digit.
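The lookup-based approach for the assistive grasping device could be sketched as a nearest-centroid classifier followed by a table lookup. This is a minimal sketch under several assumptions:

- The features are normalized flex values (0 = straight, 1 = fully bent) for the thumb and first two fingers only.
- The cluster names, centroid values, and target angles are hypothetical placeholders, not learned from data.
- Nearest-centroid assignment stands in for whatever clustering method is eventually chosen.

```python
import math
from typing import Dict, Sequence

# Hypothetical centroids: mean normalized flex of (thumb, index, middle)
# for each gesture cluster.
CENTROIDS: Dict[str, Sequence[float]] = {
    "open": (0.0, 0.0, 0.0),
    "pinch": (0.6, 0.6, 0.1),
    "fist": (1.0, 1.0, 1.0),
}

# Hypothetical lookup table: target SRF joint angle (degrees) per cluster.
SRF_TARGET_DEG: Dict[str, float] = {
    "open": 0.0,    # open hand -> finger fully open
    "pinch": 45.0,  # placeholder; the mapping for other gestures is undecided
    "fist": 90.0,   # closed hand -> finger fully bent
}


def nearest_cluster(features: Sequence[float],
                    centroids: Dict[str, Sequence[float]]) -> str:
    """Return the label of the centroid closest (Euclidean distance) to features."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


def srf_target(features: Sequence[float],
               centroids: Dict[str, Sequence[float]] = CENTROIDS,
               table: Dict[str, float] = SRF_TARGET_DEG) -> float:
    """Classify a hand pose, then look up the SRF joint angle for that cluster."""
    return table[nearest_cluster(features, centroids)]
```

For example, `srf_target((0.9, 0.95, 0.85))` falls in the `"fist"` cluster and returns `90.0`. Because the output only takes a few discrete values, the servo command would jump between positions. Smoothing or interpolating between centroids would be one way to soften that.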

Future work on using human computation to help define the gesture-to-finger data, and on a physical therapy procedure for hand remapping, is needed but will not be explored by the end of the course.

Vedanth:

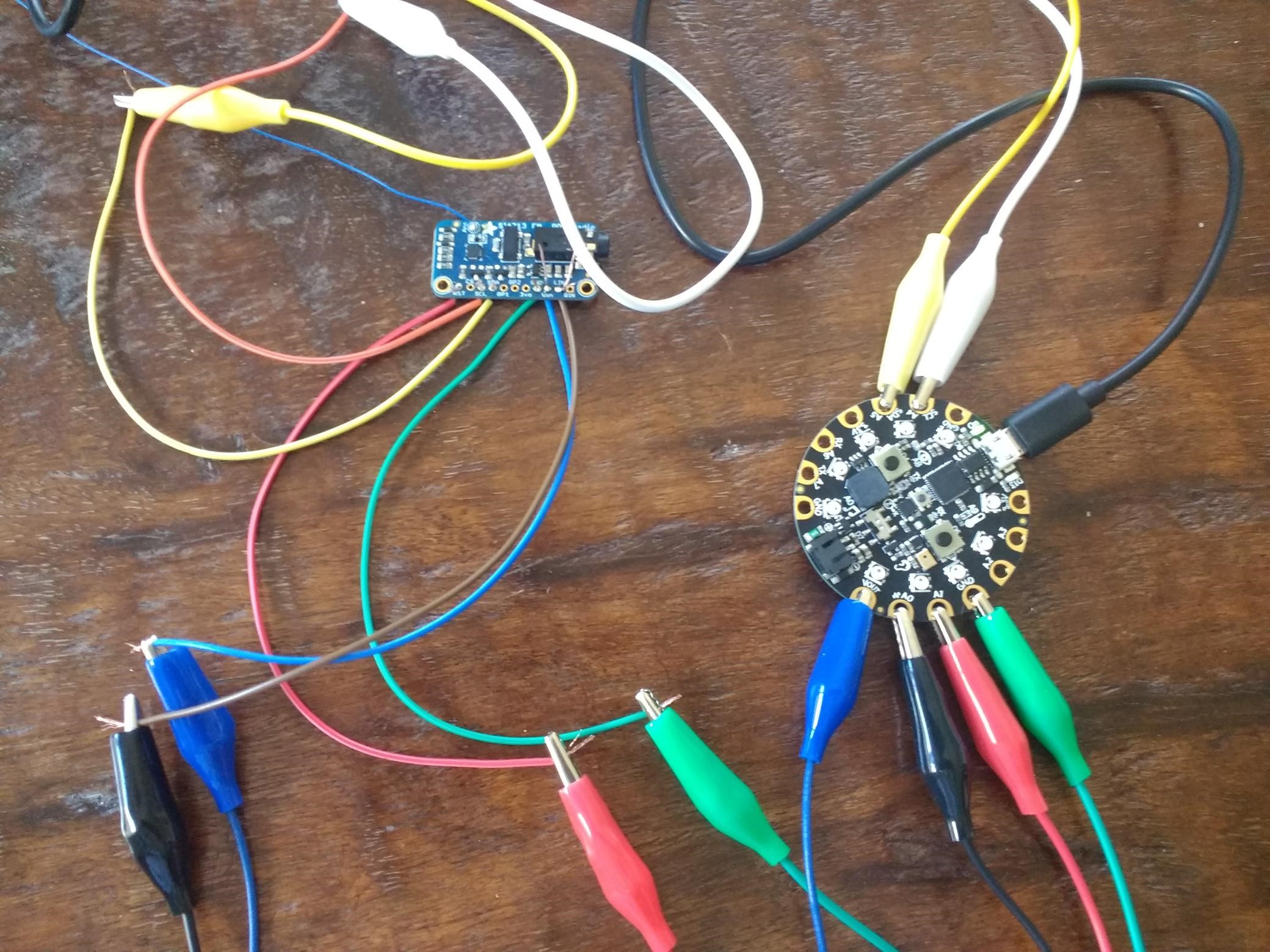

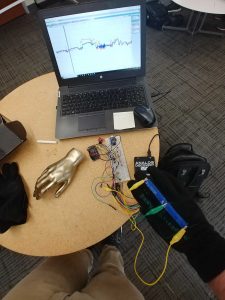

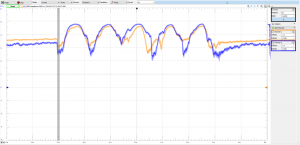

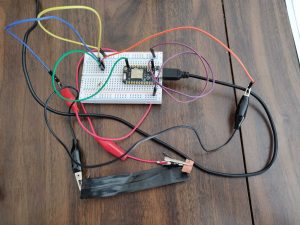

This week we did more testing with the DIY and the Adafruit flex sensors. We found that the two have very different resistances, so we needed different resistors in our circuit to test them. I was also able to connect the Particle to the IFTTT app (which connects to smart switches). We wrote a program that publishes an ‘event’ when the flex sensor is bent; IFTTT reads the event and then tells the smart plug app to turn the smart plug on. However, there was a large delay in this process, because the IFTTT app checks for the published event only once every minute. So we are starting to look into other options, such as building our own Android app that forwards the published event to the smart plug app. I am looking into how the Particle can send information to and receive information from the Android app directly.
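The resistor choice can be reasoned about directly. Assume each sensor is read through a simple voltage divider, with the flex sensor on the high side and a fixed resistor to ground. The fixed resistor that maximizes the output swing between the sensor's minimum and maximum resistance is the geometric mean of the two. That is why sensors with very different resistance ranges need different resistors. This is a minimal sketch; the 3.3 V supply, the 12-bit ADC full scale (4095), and the example resistances are assumptions.

```python
import math


def best_fixed_resistor(r_min: float, r_max: float) -> float:
    """Fixed resistor that maximizes the divider's output swing.

    For Vout = Vcc * R_fixed / (R_fixed + R_sensor), the difference in Vout
    between R_sensor = r_min and R_sensor = r_max peaks at
    R_fixed = sqrt(r_min * r_max).
    """
    return math.sqrt(r_min * r_max)


def divider_vout(r_sensor: float, r_fixed: float, vcc: float = 3.3) -> float:
    """Output voltage with the sensor on the high side and the fixed resistor to ground."""
    return vcc * r_fixed / (r_fixed + r_sensor)


def adc_to_resistance(counts: int, r_fixed: float, full_scale: int = 4095) -> float:
    """Invert the divider to recover sensor resistance from a raw ADC reading.

    Assumes a 12-bit ADC referenced to the same supply as the divider.
    """
    if counts <= 0:
        return math.inf  # no voltage across the fixed resistor: open circuit
    return r_fixed * (full_scale - counts) / counts
```

Consider a hypothetical sensor that ranges from 10 kΩ to 40 kΩ. It would call for about 20 kΩ. One that ranges from 500 Ω to 2 kΩ would call for about 1 kΩ. The swing changes slowly near the optimum, so the nearest standard resistor value should work.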

Shruthi:

This week Vedant and I worked on building a pipeline from the flex sensor to end devices using IFTTT. We successfully connected sensor data to a smart plug via IFTTT and a smart plug app. However, we noticed that this pipeline requires multiple reads and pings to the server with no guarantee of immediate service, so the latency between a flex and the resulting task was high. When we debugged the cause of the lag, we found that although the Particle Photon uploads data to the cloud fairly quickly, IFTTT is slow to read data from the Particle servers. Another constraint is that IFTTT does not allow communication to the Google Assistant, only communication from the Google Assistant (which is not what we want). Customization on IFTTT is very restrictive, so we plan to use the cloud API exposed by Particle and build our own Android application. This may involve a bit of a learning curve, and I am looking into it.
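Whatever replaces IFTTT, sending only one message per physical bend keeps the number of server pings down. The sketch below is an edge-triggered detector with hysteresis. It rests on these assumptions:

- The reading increases as the sensor bends.
- The thresholds and event names are hypothetical.
- `publish` is a hypothetical stand-in for whatever cloud call or app message ends up being used.

On the Photon itself this logic would live in the C++ firmware. It is shown here in Python only to illustrate the state machine.

```python
from typing import Callable, Optional


class BendDetector:
    """Emit one event per bend/unbend transition, with hysteresis.

    A single threshold lets a reading that hovers near it toggle rapidly and
    publish a burst of events; two thresholds (bend_above > release_below)
    suppress that chatter so each physical bend costs one message.
    """

    def __init__(self, bend_above: float, release_below: float,
                 publish: Callable[[str], None]):
        if release_below >= bend_above:
            raise ValueError("release_below must be lower than bend_above")
        self.bend_above = bend_above
        self.release_below = release_below
        self.publish = publish  # hypothetical hook for the outgoing message
        self.bent = False

    def update(self, reading: float) -> Optional[str]:
        """Feed one reading; on a state change, publish and return the event name."""
        event = None
        if not self.bent and reading > self.bend_above:
            self.bent, event = True, "flex_bent"
        elif self.bent and reading < self.release_below:
            self.bent, event = False, "flex_released"
        if event is not None:
            self.publish(event)
        return event
```

For example, `BendDetector(600, 400, sent.append)` fed the readings `100, 610, 590, 620, 300, 200` publishes exactly two events, `"flex_bent"` then `"flex_released"`. The dips and spikes between the thresholds publish nothing.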

Materials List

Home Assistant Sub-Project

- Particle Photon – $19.00 (1)

- Flex sensor – 4.5 inches – $12.95 (1)

- Flex sensor – 2.5 inches – $7.95 (1)

*We decided to go ahead with more of the DIY flex sensors, so we may need more Velostat, copper sheets, and conductive thread.

SRF Sub-Project

Already purchased / owned

- Glove for prototype [final version subject to change based on prototype]

- Sparkfun IMU – $14.95 (1)

- Flex sensor – 4.5 inches – $15.95 (1)

- ESP32 Dev Board – $15.00 (1)

- High Torque Micro Servo – $9.95 (3)

- Resistive Force Sensor – $7.00 (1)

- Flex sensor – 4.5 inches – $12.95 (1)

Future / After initial prototype

- Resistive Force Sensor – $7.00 (5)

- Flex sensor – 4.5 inches – $12.95 (3) and/or Flex sensor – 2.5 inches – $7.95 (8)

Areas of concern:

The only major concern right now is time to get everything complete, mainly the algorithm: I need to understand the signal-to-noise ratio in my data, the precision of the finger, etc., before I can determine the best course of action.
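One way to put a number on the signal-to-noise ratio is to record each sensor twice. The first recording holds the joint still and captures noise only. The second sweeps the joint through its full range. The sketch below assumes that protocol and uses the power-ratio definition `SNR_dB = 10 * log10(P_signal / P_noise)`. The recording protocol and function names are hypothetical, and a peak-to-peak definition would also be reasonable.

```python
import math
from statistics import fmean
from typing import Sequence


def rms_about_mean(samples: Sequence[float]) -> float:
    """Root-mean-square deviation from the sample mean (population std dev)."""
    mu = fmean(samples)
    return math.sqrt(fmean([(x - mu) ** 2 for x in samples]))


def snr_db(still: Sequence[float], sweep: Sequence[float]) -> float:
    """Estimate SNR in dB from two recordings of the same sensor.

    still: readings with the joint held motionless (treated as pure noise).
    sweep: readings while flexing through the full range (signal plus noise).
    Noise power is subtracted from the sweep power so the ratio reflects
    the signal alone.
    """
    noise_power = rms_about_mean(still) ** 2
    total_power = rms_about_mean(sweep) ** 2
    signal_power = max(total_power - noise_power, 0.0)
    if signal_power == 0.0:
        return -math.inf  # sweep is indistinguishable from noise
    if noise_power == 0.0:
        return math.inf   # no measurable noise in the still recording
    return 10.0 * math.log10(signal_power / noise_power)
```

Suppose a still recording varies by about ±1 ADC count around its mean and a sweep varies by about ±100 counts. That gives roughly 40 dB. Comparing this figure across the DIY and commercial sensors would be one input to choosing the algorithm.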